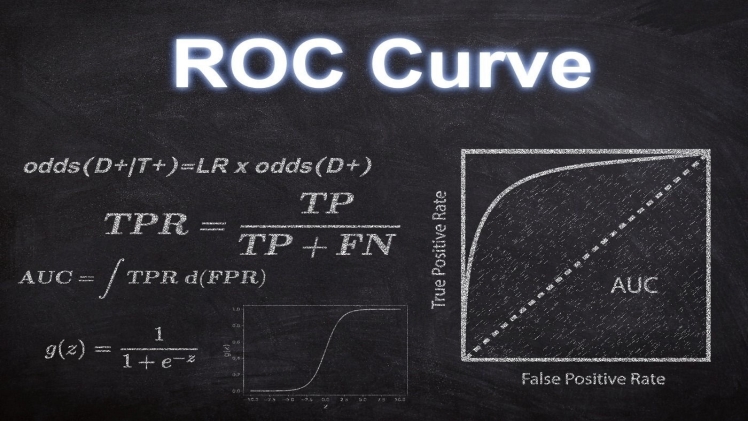

Evaluating results is essential work in machine learning. When assessing and visualising how well a classification model performs, including on multi-class problems, we often turn to the AUC-ROC curve: the Area Under the Curve (AUC) of the Receiver Operating Characteristic (ROC). It is one of the most important metrics for evaluating a classification model, and it is also known as AUROC (Area Under the Receiver Operating Characteristic).

What does the term “AUC – ROC Curve” refer to?

The AUC-ROC curve is a performance measure for classification problems at various threshold settings. The ROC is a probability curve that plots the true positive rate (TPR) against the false positive rate (FPR) as the decision threshold varies, and the AUC, the area under that curve, represents the degree of separability: it tells us how well the model can distinguish between the classes. The higher the AUC, the better the model is at predicting 0s as 0s and 1s as 1s. By analogy, in a medical setting, a higher AUC means the model is better at distinguishing between patients with the disease and patients without it.
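To make the definition concrete, here is a minimal from-scratch sketch that sweeps the decision threshold, records one (FPR, TPR) point per threshold, and integrates the resulting curve with the trapezoidal rule. The function names `roc_curve_points` and `auc_trapezoid` and the toy labels and scores are illustrative assumptions, not part of any library; the code assumes binary 0/1 labels (1 = positive) with both classes present.

```python
from typing import List, Tuple


def roc_curve_points(y_true: List[int], scores: List[float]) -> List[Tuple[float, float]]:
    """Return (FPR, TPR) points obtained by sweeping the decision threshold.

    A sample is predicted positive when its score is >= the threshold.
    Assumes labels are 0/1 and both classes are present.
    """
    n_pos = sum(y_true)
    n_neg = len(y_true) - n_pos
    # Start above every score (nothing predicted positive), then lower the
    # threshold to each distinct score in turn, from highest to lowest.
    thresholds = [float("inf")] + sorted(set(scores), reverse=True)
    points = []
    for t in thresholds:
        tp = sum(1 for y, s in zip(y_true, scores) if y == 1 and s >= t)
        fp = sum(1 for y, s in zip(y_true, scores) if y == 0 and s >= t)
        points.append((fp / n_neg, tp / n_pos))
    return points


def auc_trapezoid(points: List[Tuple[float, float]]) -> float:
    """Area under a curve given as (x, y) points with non-decreasing x."""
    area = 0.0
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        area += (x1 - x0) * (y0 + y1) / 2
    return area


y_true = [0, 0, 1, 1]
scores = [0.1, 0.4, 0.35, 0.8]
points = roc_curve_points(y_true, scores)
print(points)                 # → [(0.0, 0.0), (0.0, 0.5), (0.5, 0.5), (0.5, 1.0), (1.0, 1.0)]
print(auc_trapezoid(points))  # → 0.75
```

In practice you would normally use a library routine such as scikit-learn's `roc_curve` and `roc_auc_score` from `sklearn.metrics`; the loop above only shows what such routines compute.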

How can we infer the model’s performance from its AUC?

An excellent model has an AUC close to 1, which means it has a high degree of separability. A poor model has an AUC close to 0, which means it has the worst possible separability: in effect, it is inverting the result, predicting 0s as 1s and 1s as 0s. When the AUC is 0.5, the model has no capacity to separate the classes at all.
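One way to see why these reference values hold is the rank interpretation of AUC: it equals the probability that a randomly chosen positive example receives a higher score than a randomly chosen negative one, with ties counted as half. The sketch below checks the three cases just described (a good model, an inverted model, and a model that gives every example the same score); the `pairwise_auc` helper and the scores are hypothetical.

```python
from itertools import product


def pairwise_auc(y_true, scores):
    """AUC as the share of (positive, negative) pairs the model ranks correctly.

    A pair scores 1 if the positive example has the higher score, 0.5 on a tie,
    and 0 otherwise. Assumes 0/1 labels with both classes present.
    """
    pos = [s for y, s in zip(y_true, scores) if y == 1]
    neg = [s for y, s in zip(y_true, scores) if y == 0]
    wins = 0.0
    for p, n in product(pos, neg):
        if p > n:
            wins += 1.0
        elif p == n:
            wins += 0.5
    return wins / (len(pos) * len(neg))


labels = [0, 0, 1, 1]
print(pairwise_auc(labels, [0.1, 0.2, 0.8, 0.9]))  # good model → 1.0
print(pairwise_auc(labels, [0.9, 0.8, 0.2, 0.1]))  # inverted model → 0.0
print(pairwise_auc(labels, [0.5, 0.5, 0.5, 0.5]))  # no separation → 0.5
```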

Let’s interpret these statements.

As we know, the ROC is a probability curve, so let’s plot the distributions of the predicted probabilities. The red distribution curve represents the positive class (patients with the disease), and the green distribution curve represents the negative class (patients without the disease).
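The distributions in these plots can be imitated with a small simulation. The sketch below assumes, purely for illustration, that the model’s scores for each class follow a unit-variance normal distribution, with the negative (green) class centred at 0 and the positive (red) class centred at a hypothetical `separation` value; shrinking the separation increases the overlap between the two curves. The helper names `pairwise_auc` and `simulate` are our own.

```python
import random


def pairwise_auc(pos, neg):
    """Share of (positive, negative) pairs where the positive scores higher; ties count 0.5."""
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def simulate(separation, n=400, seed=0):
    """Draw n scores per class from unit-variance normal distributions.

    Negatives (green curve) are centred at 0 and positives (red curve) at
    `separation`, so a smaller separation means more overlap.
    """
    rng = random.Random(seed)
    neg = [rng.gauss(0.0, 1.0) for _ in range(n)]
    pos = [rng.gauss(separation, 1.0) for _ in range(n)]
    return pos, neg


for sep in (8.0, 1.0, 0.0):
    pos, neg = simulate(sep)
    print(f"separation={sep}: AUC={pairwise_auc(pos, neg):.3f}")
```

For two unit-variance normal distributions whose means differ by d, the theoretical AUC is Φ(d/√2), about 0.76 for d = 1, so the printed values should land roughly near 1, 0.76 and 0.5 as the overlap grows.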

This is the ideal situation. When the two curves do not overlap at all, the model has a perfect measure of separability (an AUC of 1): it can fully distinguish between the positive and negative classes.

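When the two distributions overlap, we introduce type 1 errors (false positives) and type 2 errors (false negatives). The threshold determines the balance between them: lowering it reduces type 2 errors but increases type 1 errors, and raising it does the opposite. An AUC of 0.7 means there is a 70% chance that the model ranks a randomly chosen positive example above a randomly chosen negative one, that is, that it correctly distinguishes between the positive and negative classes.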
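The trade-off between the two error types can be shown by counting them at a few thresholds. In this sketch the labels and scores are made up so that the two classes overlap between about 0.45 and 0.6, `error_counts` is a hypothetical helper, and a sample is predicted positive when its score is at least the threshold.

```python
def error_counts(y_true, scores, threshold):
    """Return (type 1 errors, type 2 errors) at a given threshold.

    Type 1 = false positives (negatives scored >= threshold);
    type 2 = false negatives (positives scored < threshold).
    """
    fp = sum(1 for y, s in zip(y_true, scores) if y == 0 and s >= threshold)
    fn = sum(1 for y, s in zip(y_true, scores) if y == 1 and s < threshold)
    return fp, fn


# Hypothetical scores: the two classes overlap between about 0.45 and 0.6.
y_true = [0, 0, 0, 0, 0, 1, 1, 1, 1, 1]
scores = [0.10, 0.25, 0.40, 0.55, 0.60, 0.45, 0.50, 0.70, 0.85, 0.90]

for t in (0.3, 0.5, 0.7):
    fp, fn = error_counts(y_true, scores, t)
    print(f"threshold={t}: type 1 (FP)={fp}, type 2 (FN)={fn}")
# threshold=0.3: type 1 (FP)=3, type 2 (FN)=0
# threshold=0.5: type 1 (FP)=2, type 2 (FN)=1
# threshold=0.7: type 1 (FP)=0, type 2 (FN)=2
```

While the distributions overlap, no single threshold removes both error types at once, which is one reason the AUC summarises performance across all thresholds instead of at one.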

This is the worst situation. When the AUC is approximately 0.5, the model has no capacity to discriminate between the positive and negative classes.

When the AUC is approximately 0, the model is actually inverting the classes: it predicts the negative class as the positive class and vice versa.
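The inverted case has a practical consequence: because AUC depends only on how the scores rank positives against negatives, reversing that ranking turns an AUC of a into 1 − a. A minimal sketch, using a hypothetical `pairwise_auc` helper and made-up scores from a model that mostly ranks the classes backwards:

```python
def pairwise_auc(y_true, scores):
    """Share of (positive, negative) pairs ranked correctly; ties count 0.5."""
    pos = [s for y, s in zip(y_true, scores) if y == 1]
    neg = [s for y, s in zip(y_true, scores) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Hypothetical scores from a model that mostly ranks the classes backwards.
y_true = [0, 0, 0, 1, 1, 1]
scores = [0.9, 0.7, 0.8, 0.2, 0.3, 0.75]

auc = pairwise_auc(y_true, scores)
# Reversing the ranking (here via 1 - score) turns AUC a into 1 - a.
flipped = pairwise_auc(y_true, [1 - s for s in scores])
print(round(auc, 3), round(flipped, 3))  # → 0.111 0.889
```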

How do we use the AUC-ROC curve for a multi-class model?

In a multi-class model, we can plot N AUC-ROC curves for N classes using the One vs. All (also called one-vs-rest) methodology. For example, if you have three classes labelled X, Y, and Z, you will have one ROC for X classified against Y and Z, one for Y classified against X and Z, and one for Z classified against X and Y.
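The one-vs-all procedure can be sketched in a few lines: for each class, turn the labels into a binary "this class versus the rest" problem, use that class’s column of predicted probabilities as the score, and compute an ordinary binary AUC. The helper names, the six samples and their probabilities are hypothetical; the last line shows one common way to summarise the per-class values, an unweighted (macro) average.

```python
def pairwise_auc(y_true, scores):
    """Binary AUC: share of (positive, negative) pairs ranked correctly; ties count 0.5."""
    pos = [s for y, s in zip(y_true, scores) if y == 1]
    neg = [s for y, s in zip(y_true, scores) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def one_vs_rest_auc(labels, prob_rows, classes):
    """One AUC per class: that class is positive, every other class is negative."""
    result = {}
    for i, cls in enumerate(classes):
        y_binary = [1 if label == cls else 0 for label in labels]
        class_scores = [row[i] for row in prob_rows]  # column i = P(class cls)
        result[cls] = pairwise_auc(y_binary, class_scores)
    return result


classes = ["X", "Y", "Z"]
labels = ["X", "Y", "Z", "X", "Y", "Z"]
# Hypothetical predicted probabilities; columns are in the order X, Y, Z.
probs = [
    [0.70, 0.20, 0.10],
    [0.20, 0.60, 0.20],
    [0.10, 0.30, 0.60],
    [0.50, 0.30, 0.20],
    [0.45, 0.25, 0.30],
    [0.30, 0.30, 0.40],
]

per_class = one_vs_rest_auc(labels, probs, classes)
print(per_class)                                  # → {'X': 1.0, 'Y': 0.625, 'Z': 1.0}
print(sum(per_class.values()) / len(per_class))  # macro average → 0.875
```

If you prefer a library implementation, scikit-learn’s `roc_auc_score` offers one-vs-rest averaging through its `multi_class="ovr"` option.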